Authors: Ramon Sanchez-Iborra, Rodrigo Asensio-Garriga, Gonzalo Alarcón-Hellín, Antonio Skarmeta

Organization: University of Murcia

In the near future, 6G networks are expected to function as a single system shared among various stakeholders. The role of customers will shift toward that of providers, as physical and virtualized infrastructures become ready to host any type of application or service. These new scenarios are largely enabled by virtualization techniques, particularly lightweight ones, together with agile resource handling, reallocation, and migration. Thanks to intelligent resource and task management, service provisioning will seamlessly take advantage of a variety of infrastructure capabilities, with service components self-adapting and self-configuring to improve their performance.

The purpose and nature of the services offered in these dynamic scenarios differ depending on the application domain. Computation tasks should be appropriately adapted or instantiated along a seamless chain within the fog-edge-core-cloud continuum, taking into account both network performance and the capabilities of the processing devices. Furthermore, in resource-constrained devices, such as battery-powered ones, energy and processing consumption are particularly critical. To overcome this difficulty, task offloading becomes a crucial enabler in 6G networks. By providing the flexibility to select the optimal node for executing a specific task, task offloading enables dynamic service handling. As noted above, the decision-making process that drives these mechanisms depends on the requirements and nature of the service as well as on the features of the available infrastructure resources. In line with the user-centric nature of 6G networks, service consumers are the primary beneficiaries of task offloading, through an enhanced experience. From the perspective of computational nodes, task offloading supports resource-constrained devices by executing their computational tasks elsewhere, thereby reducing their power consumption, while cloud nodes rely on offloading to reduce network load and end-to-end latency.

Along these lines, specialized AI engines need to be designed to natively exploit information coming from data, services, requesters, and available resources in order to achieve efficient task offloading. These engines seek to answer three primary questions: “what,” “where,” and “when” to offload a given task. For example, proximity to end users is crucial in low-latency scenarios; however, affinity decisions should also consider other capabilities, such as intensive computation, to enable flexible orchestration and placement for load-balancing purposes.
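The “where” question can be illustrated with a deliberately simplified placement rule. The sketch below is not NANCY’s AI engine: it assumes a hypothetical three-tier continuum (device, edge, cloud), a completion-time model made of upload time plus round trip plus processing time, and an arbitrary `local_penalty` on local execution as a crude proxy for battery drain. All names and figures are illustrative; the sketch only shows how latency requirements and device capabilities jointly constrain the choice of node.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Node:
    name: str
    rtt_ms: float           # round-trip latency from the device to this node
    cpu_ghz: float          # processing capacity available for the task
    is_local: bool = False  # True for the requesting device itself

@dataclass
class Task:
    gigacycles: float       # computational load of the task
    deadline_ms: float      # maximum tolerated completion time
    input_mb: float         # data that must be uploaded if the task is offloaded

def completion_time_ms(task: Task, node: Node, uplink_mbps: float) -> float:
    """Estimated completion time = upload + round trip + processing."""
    compute_ms = task.gigacycles / node.cpu_ghz * 1000.0
    if node.is_local:
        return compute_ms  # nothing to transfer when running locally
    upload_ms = task.input_mb * 8.0 / uplink_mbps * 1000.0
    return upload_ms + node.rtt_ms + compute_ms

def choose_node(task: Task, nodes: List[Node], uplink_mbps: float,
                local_penalty: float = 0.5) -> Optional[Node]:
    """Return the feasible node with the lowest cost, or None if none fits.

    Cost is the completion time; local execution is inflated by the
    hypothetical `local_penalty` to account for battery drain."""
    best: Optional[Node] = None
    best_cost = float("inf")
    for node in nodes:
        t = completion_time_ms(task, node, uplink_mbps)
        if t > task.deadline_ms:
            continue  # this placement would violate the latency requirement
        cost = t * (1.0 + local_penalty) if node.is_local else t
        if cost < best_cost:
            best, best_cost = node, cost
    return best

continuum = [
    Node("device", rtt_ms=0.0, cpu_ghz=1.0, is_local=True),
    Node("edge", rtt_ms=5.0, cpu_ghz=8.0),
    Node("cloud", rtt_ms=60.0, cpu_ghz=32.0),
]
light = Task(gigacycles=0.4, deadline_ms=100.0, input_mb=0.5)
heavy = Task(gigacycles=8.0, deadline_ms=1000.0, input_mb=0.5)
print(choose_node(light, continuum, uplink_mbps=100.0).name)  # edge
print(choose_node(heavy, continuum, uplink_mbps=100.0).name)  # cloud
```

With these made-up figures, the latency-bound task lands on the edge because the cloud round trip breaks its 100 ms deadline, while the compute-heavy task goes to the cloud because only its larger capacity finishes within one second. This mirrors the trade-off between proximity and computational capacity described above; a learned model would replace the hand-written cost function.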

NANCY proposes novel offloading techniques that use the user profile to effectively and dynamically forecast the user’s future needs and movements. This strategy allows the system to anticipate needs and optimize its performance proactively. It relies on continuously monitoring each user’s service requests, which yield important insights into the user’s preferences and behavioral patterns. Furthermore, these AI-model-based offloading mechanisms adapt to network conditions in real time, enabling more effective resource management and system performance optimization. They also allow dynamic adjustments to offloading procedures, which promote system scalability and flexibility in resource management.
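As a minimal illustration of forecasting from a user’s request history, the sketch below uses a first-order Markov chain that counts which event tends to follow which. This is a swapped-in classical technique chosen for brevity, not the AI models NANCY proposes; the class name, service names, and cell identifiers are all hypothetical. The same counter can track either requested services or visited cells, covering both the “needs” and the “movements” mentioned above.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Hashable, Iterable, Optional

class MarkovPredictor:
    """First-order Markov model over one user's sequence of events.

    Events can be requested services or visited cells alike."""

    def __init__(self) -> None:
        self.transitions: DefaultDict[Hashable, Counter] = defaultdict(Counter)
        self.last: Optional[Hashable] = None

    def observe(self, event: Hashable) -> None:
        # Count the transition from the previous event to this one.
        if self.last is not None:
            self.transitions[self.last][event] += 1
        self.last = event

    def observe_all(self, events: Iterable[Hashable]) -> None:
        for event in events:
            self.observe(event)

    def predict_next(self) -> Optional[Hashable]:
        """Most frequent successor of the latest event, or None if unknown."""
        counts = self.transitions.get(self.last)
        if not counts:
            return None
        return counts.most_common(1)[0][0]

    def probability(self, candidate: Hashable) -> float:
        """Empirical probability that `candidate` comes next."""
        counts = self.transitions.get(self.last, Counter())
        total = sum(counts.values())
        return counts[candidate] / total if total else 0.0

# Hypothetical monitored service requests of one user.
services = MarkovPredictor()
services.observe_all(["news", "video", "news", "video", "news", "maps", "news"])
print(services.predict_next())                   # video
print(round(services.probability("video"), 2))   # 0.67

# Hypothetical sequence of cells visited by the same user.
cells = MarkovPredictor()
cells.observe_all(["cell-A", "cell-B", "cell-C", "cell-A", "cell-B"])
print(cells.predict_next())                      # cell-C
```

A prediction such as `cell-C` could, for instance, trigger pre-deployment of the user’s service near that cell ahead of time; richer models would add context such as time of day and would only act above a confidence threshold.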

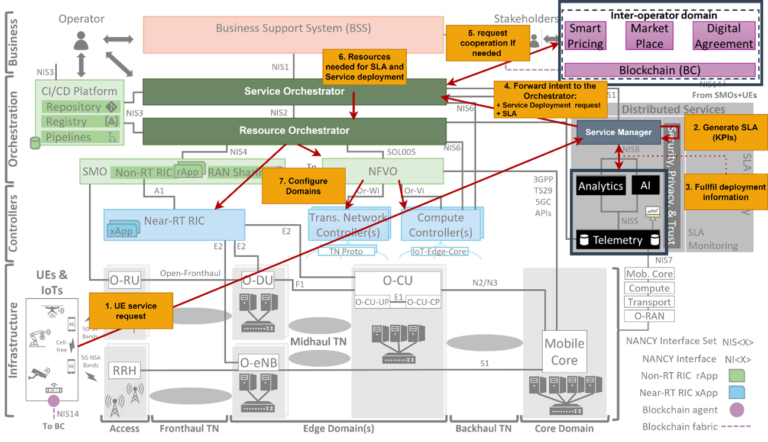

NANCY’s offloading workflow is presented in Figure 1. The process begins when the user equipment (UE) contacts a service manager in the NANCY architecture to request a specific service (1). After confirming that the UE’s subscription grants access to that service, the service manager that received the request creates the requirement specifications needed for the service to be deployed and to run properly, using a Service Level Agreement (SLA) format also defined in NANCY (2). The service manager then asks the Artificial Intelligence (AI) blocks to decide where and how to deploy the requested service based on the Key Performance Indicators (KPIs) in the SLA (3). The service orchestrator receives the deployment decision (4) and determines whether the service can be deployed in the local operator while maintaining the KPIs in the SLA. If the local operator is unable to meet the SLA, the service orchestrator sends the service request to the marketplace to check whether another operator can help provide the service (5). When the final enforcement plan is ready, whether prepared by the local operator or by an external one (6), the corresponding service orchestrator requests its attached resource orchestrator to configure each of the domains involved in the service provisioning (7).

Figure 1: NANCY’s task offloading workflow
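The seven steps of the workflow can be traced in a short program. This is a minimal sketch under stated assumptions, not NANCY’s implementation: the SLA fields, the subscription and requirements tables, the operator capabilities, and the latency threshold standing in for the AI blocks are all hypothetical, and the marketplace here simply returns the first external operator able to meet the SLA, leaving pricing aside. Step numbers in the comments match those in the text.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

@dataclass
class SLA:
    service: str
    max_latency_ms: float       # KPI: maximum end-to-end latency
    min_throughput_mbps: float  # KPI: minimum throughput

@dataclass
class Operator:
    name: str
    latency_ms: float           # latency this operator can guarantee
    throughput_mbps: float      # throughput this operator can guarantee
    domains: List[str]          # domains its resource orchestrator configures

    def can_meet(self, sla: SLA) -> bool:
        return (self.latency_ms <= sla.max_latency_ms
                and self.throughput_mbps >= sla.min_throughput_mbps)

# Hypothetical subscriber and service catalogue data.
SUBSCRIPTIONS: Dict[str, Set[str]] = {"ue-1": {"ar-streaming"}}
REQUIREMENTS: Dict[str, Tuple[float, float]] = {"ar-streaming": (20.0, 50.0)}

def service_manager(ue: str, service: str) -> SLA:
    # (1)-(2): check the UE subscription, then express requirements as an SLA.
    if service not in SUBSCRIPTIONS.get(ue, set()):
        raise PermissionError(f"{ue} is not subscribed to {service}")
    latency, throughput = REQUIREMENTS[service]
    return SLA(service, latency, throughput)

def ai_blocks(sla: SLA) -> str:
    # (3): placeholder rule standing in for the AI placement decision.
    return "edge" if sla.max_latency_ms <= 50.0 else "cloud"

def marketplace(sla: SLA, others: List[Operator]) -> Optional[Operator]:
    # (5): find an external operator able to honour the SLA.
    return next((op for op in others if op.can_meet(sla)), None)

def resource_orchestrator(op: Operator, placement: str) -> List[str]:
    # (7): configure every domain involved in the provisioning.
    return [f"{op.name}:{domain}:{placement}" for domain in op.domains]

def offload(ue: str, service: str, local: Operator,
            others: List[Operator]) -> List[str]:
    sla = service_manager(ue, service)
    placement = ai_blocks(sla)  # (4): decision handed to the service orchestrator
    provider = local if local.can_meet(sla) else marketplace(sla, others)
    if provider is None:
        raise RuntimeError("no operator can meet the SLA")
    # (6): the enforcement plan is ready; (7): enforce it across domains.
    return resource_orchestrator(provider, placement)

local_op = Operator("op-local", 35.0, 100.0, ["ran", "transport", "compute"])
partners = [Operator("op-b", 15.0, 80.0, ["ran", "compute"])]
print(offload("ue-1", "ar-streaming", local_op, partners))
# ['op-b:ran:edge', 'op-b:compute:edge']
```

In this example, the local operator’s 35 ms latency misses the 20 ms KPI, so the request falls through to the marketplace and is enforced by the external operator `op-b`.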

In particular, when the local operator is unable to meet the service requirements stated in the SLA, the service provisioning request is handled by the marketplace module, which controls the publication and use of resources from other operators. The marketplace forwards the request to the smart pricing module, which assesses the prices and resources offered by the various network operators. Once this module automatically determines the selected operator and its associated price, it creates a new SLA and forwards it to the service orchestrator of the operator that will provision the requested service. The SLA is also transmitted to the Digital Contract Creator module, which adds further business details (involved parties, price, etc.). This process produces a digital agreement, which is then submitted to and recorded in the NANCY blockchain via the marketplace module. Once this process is finalized, all the involved stakeholders are informed, and the offloaded service can be provisioned and exposed to the user.
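The marketplace path can likewise be sketched. All details here are hypothetical assumptions: each operator offer carries a price and a committed latency, the smart pricing rule simply picks the cheapest offer that meets the SLA latency KPI, and a toy SHA-256 hash chain stands in for the NANCY blockchain, whose design the text does not specify. The sketch only shows how a selected offer becomes an enriched digital agreement whose stored record is tamper-evident.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Offer:
    operator: str
    price_eur: float
    latency_ms: float   # latency the operator commits to

def smart_pricing(offers: List[Offer], max_latency_ms: float) -> Optional[Offer]:
    """Cheapest offer that still satisfies the SLA latency KPI (hypothetical rule)."""
    eligible = [o for o in offers if o.latency_ms <= max_latency_ms]
    return min(eligible, key=lambda o: o.price_eur, default=None)

def create_contract(sla: Dict, offer: Offer, requester: str) -> Dict:
    """Enrich the SLA with business details, in the spirit of the Digital Contract Creator."""
    return {"sla": sla, "parties": [requester, offer.operator],
            "price_eur": offer.price_eur}

class Ledger:
    """Toy append-only hash chain standing in for the NANCY blockchain."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.blocks: List[Dict] = []

    @staticmethod
    def _digest(prev: str, record: Dict) -> str:
        payload = json.dumps({"prev": prev, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def append(self, record: Dict) -> str:
        prev = self.blocks[-1]["hash"] if self.blocks else self.GENESIS
        digest = self._digest(prev, record)
        self.blocks.append({"prev": prev, "record": record, "hash": digest})
        return digest

    def verify(self) -> bool:
        # Recompute every hash and check that each block links to its predecessor.
        prev = self.GENESIS
        for block in self.blocks:
            if block["prev"] != prev or self._digest(prev, block["record"]) != block["hash"]:
                return False
            prev = block["hash"]
        return True

offers = [Offer("op-b", 12.0, 15.0), Offer("op-c", 9.5, 30.0), Offer("op-d", 11.0, 18.0)]
sla = {"service": "ar-streaming", "max_latency_ms": 20.0}
chosen = smart_pricing(offers, sla["max_latency_ms"])
contract = create_contract(sla, chosen, requester="op-local")
ledger = Ledger()
ledger.append(contract)
print(chosen.operator, ledger.verify())  # op-d True
contract["price_eur"] = 1.0              # tamper with the stored agreement
print(ledger.verify())                   # False
```

Here `op-c` is cheapest but misses the 20 ms KPI, so `op-d` wins over the pricier `op-b`. Because each block hashes its predecessor and its record, altering the stored price afterwards breaks verification, which illustrates why recording the agreement on a ledger lets the stakeholders detect later modifications.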

This workflow, which has been conceptually defined over a beyond-5G (B5G) infrastructure, will be instantiated in the different NANCY demonstrators and testbeds during the second half of the project, in order to validate it and provide evidence of its suitability for handling many kinds of service offloading requests in complex network environments.