Authors: Giorgos-Nektarios Panayotidis, Theofanis Xifilidis and Dimitris Kavallieros

Organization: Centre for Research and Technology-Hellas

It’s often been noted that wireless communications and informatics have been progressing at a pace that by far outstrips every other field! Some 30 years ago, the first commercial user workstations operated well below 24 Mbps. Today, as 5G moves towards mass commercial adoption, it’d be reasonable to expect contemporary bit rates, along with other performance indicators, to go through the roof; indeed, B5G/6G promises a dazzling 100 Gbps, at least!

It was the advent of wireless communications that brought this unprecedented speed and capacity, which will need to accommodate not just standard PCs but also the ever-growing number of Things, currently in the billions, going online via the IoT! Beyond-5G (B5G) networks target higher data rates along with reduced delay when collecting, processing and communicating large volumes of diverse data. This isn’t without its downsides and limitations, though, such as continuing a long trend of cell densification: coverage and, overall, a seamless experience without interruptions are promises kept by continually changing the access point that our cell phone connects to. Now, this is where throughput forecasting comes into play!

In this context, throughput measures the rate that is achievable in practice, which results from the interplay of many parameters. Throughput is particularly sensitive and may fluctuate massively in a setting such as the one we’ve described, with user equipment (UE), such as mobile phones, connecting to largely autonomous base stations. It is the diversity of the services and the dynamic QoS they require that call for smart mechanisms with not only a reactive but also a proactive character; this is where forecasting services come in! They give us a so-called anticipatory networking tool, with which we can efficiently tackle network events and improve decision-making in networking.

Predicting the coverage and service rates (i.e., actual bit rates or throughput) that different access points in the users’ proximity can offer is key to letting users adapt to the highly dynamic conditions of these networks. Prediction of radio coverage in mobile cellular networks is not a new concept (recall, for instance, the soft handovers of 3G networks, which monitored and predicted the quality of radio signals from multiple base stations). However, the fast growth of AI tools and techniques sets a solid foundation for enhancing the accuracy of prediction tasks and for extending prediction to higher-layer performance metrics, such as user throughput. In particular, machine learning has proven to be a viable methodology for addressing complex and computationally demanding optimization problems in settings with large control signalling overhead and dynamic requirements on user Quality of Experience (QoE).

Our Contribution

Let us think for a moment about where this world has come to: we are at an advanced stage of the famous telematics revolution (Industry 4.0), with mass digitization and ubiquitous electrification. However, the greener alternatives the latter provides could be disrupted by power outages and, what’s more, such an event could in practice barely be made up for by renewable (solar, wind) and alternative (e.g., bio-diesel) energy sources. Something better can be done about network outages, though; these outages, as we’ll see, carry a very similar but also broader meaning.

Figure 1. A network outage utility can prevent highly unsatisfactory connectivity for the user.

Given the above, in the NANCY project we have devised, designed and implemented tools for predicting two important network performance metrics. The first is network outage events: in general, the rate falls below an acceptable threshold and, in severe cases, users may even lose their connection to the network, much as in a power outage. The second is user throughput, which measures the service rate (i.e., bit rate) that users receive at the application layer. The idea is that with this information at hand, the user device can automate dynamic decisions for better network connectivity and prevent network outage events. These supervised learning schemes are aligned with the NANCY objectives, which encompass AI tools along with multi-access edge computing (MEC): they not only anticipate these network events but also aid in better-distributed resource management and in ensuring adherence to Service-Level Agreement (SLA) requirements. Overall, throughput prediction and outage probability prediction span the three service categories, namely enhanced mobile broadband (eMBB), which demands high data rates; massive machine-type communications (mMTC), which corresponds to the massive connectivity context described earlier; and ultra-reliable low-latency communications (uRLLC). Thus, throughput prediction and network outage probability prediction could enable intelligent automated orchestration and also promote user experience transparency at the edge and network convergence. In the big picture, both tools presented here belong to the AI and Analytics groups and components.
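To make the device-side decision described above more concrete, here is a minimal sketch of how a device might combine the two predictions when choosing among nearby access points. Everything in it is an illustrative assumption rather than the NANCY implementation: the `ApForecast` record, the `choose_access_point` helper, the example values and the 0.10 outage bound are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ApForecast:
    """Hypothetical per-access-point forecast (names are assumptions)."""
    ap_id: str
    predicted_throughput_mbps: float
    outage_probability: float


def choose_access_point(
    forecasts: List[ApForecast], max_outage_probability: float
) -> Optional[ApForecast]:
    """Pick the AP with the highest predicted throughput among those whose
    predicted outage probability stays within the given bound."""
    eligible = [
        f for f in forecasts if f.outage_probability <= max_outage_probability
    ]
    if not eligible:
        return None  # no candidate is reliable enough; caller decides what to do
    return max(eligible, key=lambda f: f.predicted_throughput_mbps)


# Made-up forecasts for three nearby access points.
candidates = [
    ApForecast("ap-a", predicted_throughput_mbps=420.0, outage_probability=0.30),
    ApForecast("ap-b", predicted_throughput_mbps=310.0, outage_probability=0.05),
    ApForecast("ap-c", predicted_throughput_mbps=180.0, outage_probability=0.02),
]
best = choose_access_point(candidates, max_outage_probability=0.10)
print(best.ap_id if best else "no eligible access point")
```

In this toy example the fastest access point is rejected because its predicted outage probability exceeds the bound, so the device settles for the next-fastest one that is expected to stay reliable.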

Throughput Forecasting Service (TFS) and Artificial Intelligence Network Quality Module (AINQM)

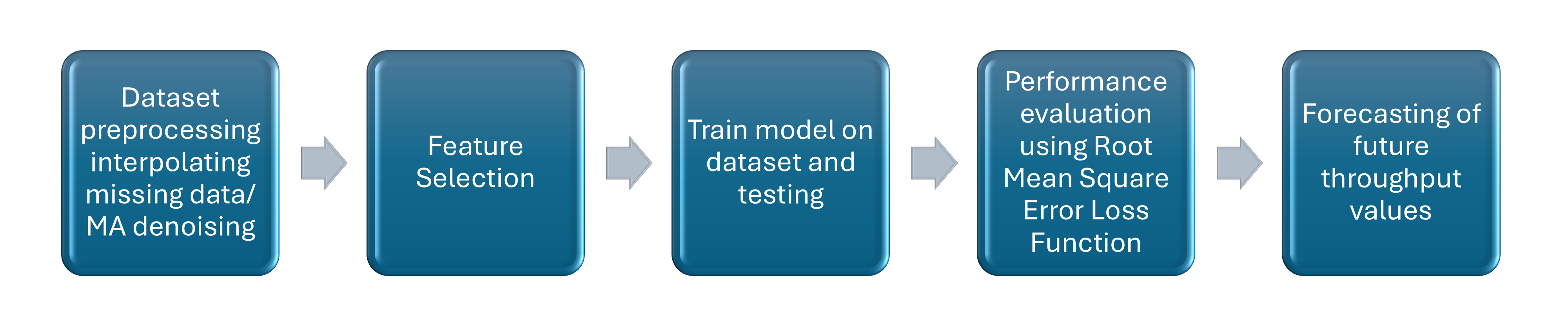

Specifically, the module developed for forecasting throughput is an AI model trained with data from the early commercial days of 5G (Lumos 5G). Apart from throughput, the dataset’s diverse variables (or features) cover location-related, mobility-related (such as moving speed) and signal strength-related parameters. Notwithstanding the high quality of the final model, it did take a while to get there: pre-processing involved proper filtering and linear interpolation to tackle not-available (NA) values. It is noteworthy that the model itself is a state-of-the-art Long Short-Term Memory (LSTM) recurrent neural network (RNN), complete with a dropout layer that counters overfitting to the training data and thus bolsters generalization and overall robustness for use elsewhere.
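As a rough illustration of the pre-processing step, the sketch below fills NA values by linear interpolation and then slices the cleaned series into fixed-length history windows, the usual input shape for a sequence model such as an LSTM. It is a simplified sketch under stated assumptions, not the project’s pipeline: the helper names `interpolate_missing` and `make_windows`, the window length of 3 and the sample values are hypothetical, and the windowing step itself is an assumption, since the text does not describe how sequences were built.

```python
from typing import List, Optional, Sequence, Tuple


def interpolate_missing(series: Sequence[Optional[float]]) -> List[float]:
    """Fill None (NA) gaps by linear interpolation between the nearest known
    samples; gaps at either edge copy the closest known value."""
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("series has no known values to interpolate from")
    filled = list(series)
    # Edge gaps have a neighbour on one side only, so reuse that neighbour.
    for i in range(known[0]):
        filled[i] = series[known[0]]
    for i in range(known[-1] + 1, len(series)):
        filled[i] = series[known[-1]]
    # Interior gaps: straight line between the two surrounding known samples.
    for left, right in zip(known, known[1:]):
        step = (series[right] - series[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = series[left] + step * (i - left)
    return filled


def make_windows(
    values: Sequence[float], history: int, horizon: int = 1
) -> List[Tuple[List[float], float]]:
    """Pair each window of `history` past samples with the value `horizon`
    steps after the window, giving supervised (input, target) examples."""
    pairs = []
    for start in range(len(values) - history - horizon + 1):
        window = list(values[start:start + history])
        target = values[start + history + horizon - 1]
        pairs.append((window, target))
    return pairs


# Made-up throughput samples in Mbps, with NA values marked as None.
raw = [100.0, None, None, 160.0, 150.0, None, 170.0]
clean = interpolate_missing(raw)
samples = make_windows(clean, history=3)
print(clean)
print(samples[0])
```

Each `(window, target)` pair could then serve as one training example for a recurrent model; a real pipeline would also carry the location, mobility and signal-strength features alongside throughput.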

Figure 2. Steps for creating the SOTA LSTM Model for our TFS tool.

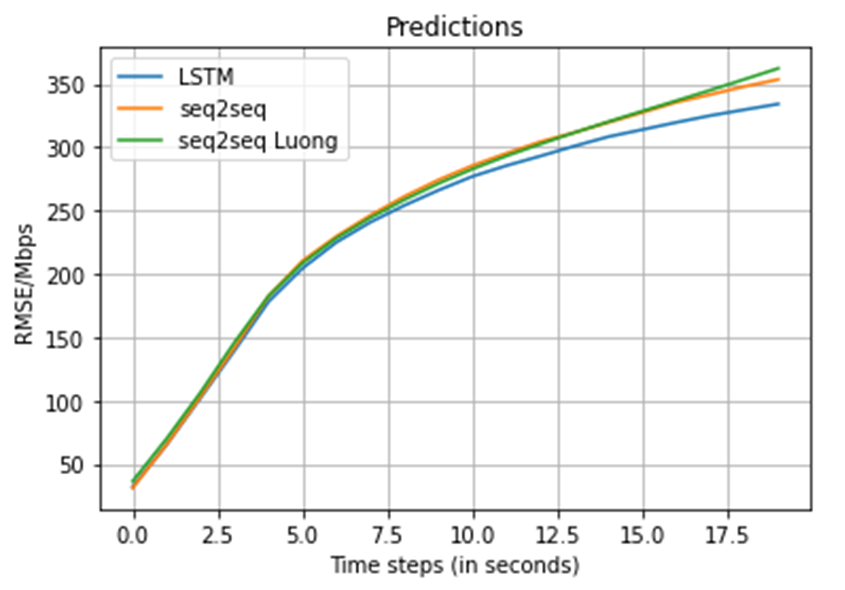

The second module focuses on predicting the probability of an outage (specifically, a rate outage), which corresponds to the rate falling below a predefined threshold. In the context of NANCY, various theoretical thresholds derived from up-to-date literature, such as uRLLC and mMTC service thresholds, were considered and put to the test. Eventually, however, it was a threshold stemming from experimentation, namely the quantile threshold of our dataset, that was selected, as it delivered the best results. Overall, this state-of-the-art machine learning algorithm provides a very accurate and robust tool, with which a network outage can be predicted before the proper decisions need to be made. An AI network analytics tool like this can be of great use to a vastly diverse set of interoperable components such as the one making up the NANCY platform.
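The idea of a data-driven quantile threshold can be sketched as follows: the threshold is taken as an empirical quantile of the observed rates, and every sample below it is labelled as an outage, which yields the binary targets a supervised outage predictor could be trained on. This is a minimal sketch under assumptions: the quantile level `q=0.25`, the helper names and the sample values are hypothetical, as the text does not state which quantile or estimator was used.

```python
import math
from typing import List, Sequence


def quantile_threshold(rates: Sequence[float], q: float) -> float:
    """Empirical q-quantile of the rates, interpolating linearly between
    neighbouring order statistics."""
    if not rates:
        raise ValueError("rates must not be empty")
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must lie in [0, 1]")
    ordered = sorted(rates)
    position = q * (len(ordered) - 1)
    lower = math.floor(position)
    upper = math.ceil(position)
    fraction = position - lower
    return ordered[lower] + fraction * (ordered[upper] - ordered[lower])


def label_outages(rates: Sequence[float], threshold: float) -> List[int]:
    """Label a sample 1 (rate outage) when its rate is below the threshold."""
    return [1 if rate < threshold else 0 for rate in rates]


# Made-up throughput samples in Mbps.
throughput_mbps = [310.0, 45.0, 520.0, 12.0, 275.0, 640.0, 88.0, 430.0, 150.0]
threshold = quantile_threshold(throughput_mbps, q=0.25)  # q is an assumption
labels = label_outages(throughput_mbps, threshold)
print(threshold, labels, sum(labels) / len(labels))
```

Unlike a fixed service threshold taken from the literature, a quantile threshold adapts to the rate distribution of the dataset at hand, which is one plausible reason it could behave better in practice.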

Figure 3. Our throughput forecasts can be used for Analytics and decision-making (resource orchestration, AP connection switching, etc.).

Epilogue: A Glance Beyond

The development of the above-mentioned tools is aligned with NANCY goals, namely, applying AI tools to enhance model performance in the context of a decentralized, reliable and secure architecture; that is, in line with the networking developments inherent to 5G design. They constitute an optimization step which, when combined with MEC, can assist in anticipating network events that affect coverage and performance and in efficiently allocating resources at the network edge. Finally, the throughput forecasting and outage probability prediction modules will be further validated in the remainder of the project using additional datasets. This complementary training and validation is set to empower the models further via NANCY-exclusive generated data and to augment their uniqueness and overall quality.