Author: Konstantinos Kyranou

Organization: Sidroco Holdings Ltd

In today’s AI-driven world, building powerful machine learning (ML) models isn’t enough; we also need to understand why they make the decisions they do. As AI permeates high-stakes domains such as cybersecurity and network management, the need for explainable AI (xAI) has never been greater. Within the NANCY project, SIDROCO designed and developed an innovative concept that combines foundation models with explainability, tailored to the specific needs of cybersecurity threat detection in telecommunications.

What are LLMs?

A Large Language Model (LLM) is an advanced AI system built using deep learning techniques and trained on massive volumes of text data. Leveraging transformer-based architectures, LLMs excel at understanding and generating human-like language with strong contextual awareness and fluency. They can perform a variety of natural language processing tasks, such as summarizing text, translating languages, answering questions, and generating content across different domains.

What are SHAP Values?

SHAP (SHapley Additive exPlanations) values help us understand how ML models make decisions. They show how much each feature (or input) contributes to a specific prediction.

SHAP is based on a concept from game theory called Shapley values, which fairly divide a “payout” (in this case, the model’s prediction) among all the features. It compares each prediction to a baseline (such as the model’s average prediction over a reference dataset) and shows how much each feature pushed the prediction up or down; the individual contributions add up to the difference between the prediction and the baseline. SHAP is model-agnostic, meaning it works with any ML model, and it provides two types of insight:

- Local: Explains individual predictions.

- Global: Shows overall feature importance across all predictions (for example, the average absolute SHAP value of each feature).

This makes SHAP a powerful tool for making models more transparent and easier to trust.
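To make the additivity idea concrete, here is a minimal Python sketch that computes exact Shapley values for one prediction by enumerating every feature coalition. It assumes a hypothetical three-feature toy model and uses a single baseline point in place of the average input; features left out of a coalition are set to their baseline values. Practical SHAP implementations rely on background datasets and faster approximations, because exhaustive enumeration grows as 2^n.

```python
from itertools import combinations
from math import factorial


def exact_shapley(model, x, baseline):
    """Exact Shapley values for one instance by enumerating all feature coalitions.

    Features outside a coalition take their baseline value, so phi[i] is
    feature i's fair share of model(x) - model(baseline).
    Cost grows as 2**n, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Use x for features in the coalition and the baseline everywhere else.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight: |S|! * (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition S.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical toy detector with an interaction between features 0 and 2.
def model(z):
    return 0.5 * z[0] + 2.0 * z[1] + z[0] * z[2]


x = [4, 3, 2]         # instance to explain (hypothetical)
baseline = [1, 0, 1]  # single reference point standing in for the "average" input

phi = exact_shapley(model, x, baseline)
print([round(v, 6) for v in phi])                      # [6.0, 6.0, 2.5]
print(round(sum(phi), 6), model(x) - model(baseline))  # 14.5 14.5
```

Features 0 and 2 share the contribution of the interaction term, which is why feature 0 receives 6.0 even though its linear term alone accounts for 1.5. The three values also sum exactly to the gap between the prediction and the baseline.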

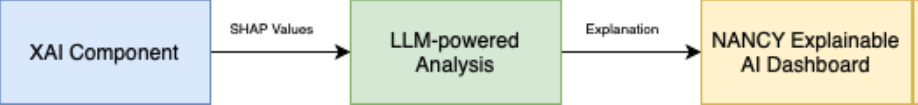

NANCY’s LLM explainer combines SHAP with LLMs to create clear, human-readable explanations. This is especially useful in complex fields like cybersecurity and network management, where understanding model decisions is critical.

Why It Works

- Human-Readable Output: SHAP gives the math, but not always the meaning. LLMs turn complex outputs into clear, contextual explanations, perfect for business stakeholders and domain experts.

- Domain-Aware Intelligence: Instead of generic summaries, NANCY uses Mistral-7B-cybersecurity-rules1, an LLM fine-tuned for cybersecurity. This means it doesn’t just say “this feature was important”; it explains why the feature matters in real-world security scenarios.

- Scalable & Automated: The system can generate thousands of local (per-prediction) explanations in a single run, without human intervention. That’s critical when dealing with massive network datasets.

How It Works

The NANCY LLM explainer is designed around a structured three-stage pipeline that transforms complex ML predictions into clear, natural language explanations.

In the first stage, the system runs the ML models on the data and computes SHAP values for their predictions. These values show which features contributed to each outcome, both at the global model level and for individual instances.
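For a linear model, the stage-one output has a closed form, which makes it a convenient illustration of local versus global SHAP values. The sketch below is not the NANCY implementation (the article does not specify its models or explainers); it assumes a hypothetical linear detector with independent features, takes the dataset mean as the baseline, computes per-instance (local) values as w_i(x_i − mean_i), and summarises them globally as the mean absolute value per feature.

```python
def linear_shap(weights, X):
    """Local SHAP values for a linear model f(x) = b + sum_i w_i * x_i.

    Assuming independent features and the dataset mean as the baseline,
    phi_i(x) = w_i * (x_i - mean_i); the intercept b cancels out.
    """
    n_rows, n_feat = len(X), len(weights)
    means = [sum(row[j] for row in X) / n_rows for j in range(n_feat)]
    return [[weights[j] * (row[j] - means[j]) for j in range(n_feat)] for row in X]


def global_importance(local_values):
    """Global importance of each feature: mean absolute local SHAP value."""
    n_rows, n_feat = len(local_values), len(local_values[0])
    return [sum(abs(row[j]) for row in local_values) / n_rows for j in range(n_feat)]


# Hypothetical coefficients and a tiny dataset of four network observations.
weights = [0.8, -0.5, 2.0]
bias = 0.1
X = [[1, 2, 0], [3, 2, 1], [2, 5, 0], [2, 3, 1]]


def predict(row):
    return bias + sum(w * v for w, v in zip(weights, row))


local = linear_shap(weights, X)
print(local[1])                  # [0.8, 0.5, 1.0]
print(global_importance(local))  # [0.4, 0.5, 1.0]

# Additivity check: local values sum to prediction minus the average prediction.
mean_prediction = sum(predict(r) for r in X) / len(X)
print(round(sum(local[1]), 6), round(predict(X[1]) - mean_prediction, 6))  # 2.3 2.3
```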

In stage two, the data structuring stage, SHAP values are organized into a structured format that includes each feature’s name, its importance score, and relevant contextual details about the prediction. This structured data serves as a clean and consistent input for the language model, ensuring that all necessary information is available for interpretation.
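A minimal sketch of the structuring step follows. The record layout is an assumption of our own: the field names (`predicted_label`, `shap_value`, `direction`, and so on), the label, and the feature names are hypothetical, since the article does not publish NANCY’s schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class FeatureContribution:
    # Hypothetical record layout for one feature's contribution.
    feature: str       # feature name
    value: float       # observed input value for this instance
    shap_value: float  # signed SHAP contribution
    direction: str     # "increases" or "decreases" the predicted score


def structure_explanation(feature_names, feature_values, shap_values,
                          prediction, baseline, label):
    """Package one prediction's SHAP output as a JSON-serialisable dict."""
    contributions = [
        FeatureContribution(
            feature=name,
            value=val,
            shap_value=round(phi, 4),
            direction="increases" if phi >= 0 else "decreases",
        )
        for name, val, phi in zip(feature_names, feature_values, shap_values)
    ]
    # Most influential features first, by absolute contribution.
    contributions.sort(key=lambda c: abs(c.shap_value), reverse=True)
    return {
        "predicted_label": label,
        "prediction_score": prediction,
        "baseline_score": baseline,
        "features": [asdict(c) for c in contributions],
    }


record = structure_explanation(
    feature_names=["packet_rate", "failed_logins", "bytes_out"],  # hypothetical
    feature_values=[4, 3, 2],
    shap_values=[0.12, 0.45, -0.07],
    prediction=0.70,
    baseline=0.20,
    label="suspicious",
)
print(json.dumps(record, indent=2))
print(record["features"][0]["feature"])  # failed_logins
```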

In the final stage, a domain-specific LLM fine-tuned for cybersecurity scenarios takes over. It interprets the structured SHAP data and generates natural language explanations. The prompts given to the LLM are carefully engineered to guide it toward explanations that are clear and technically accurate. The LLM acts as a virtual cybersecurity analyst, breaking down the ML model’s reasoning in terms that align with real-world scenarios and user needs.
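The prompt-engineering step can be sketched as a function that renders a structured record into instructions, data, and output rules. The instruction wording, the three-section output format, and the record fields are all hypothetical; the article does not publish NANCY’s prompts, and the call that sends the prompt to Mistral-7B-cybersecurity-rules1 is left out because it depends on the inference stack.

```python
# Hypothetical instructions; not the actual NANCY prompt.
SYSTEM_INSTRUCTIONS = (
    "You are a cybersecurity analyst for a telecommunications network. "
    "Explain why the detection model produced the prediction below, using only "
    "the SHAP contributions provided. Do not invent features or values."
)

# Hypothetical formatting rules that keep outputs uniform across inputs.
OUTPUT_RULES = (
    "Answer in exactly three sections:\n"
    "Summary: one sentence.\n"
    "Key factors: one bullet per feature, most influential first.\n"
    "Why it matters: one sentence on the security implication."
)


def build_prompt(record):
    """Render a structured SHAP record (a dict) into a single prompt string."""
    lines = [
        SYSTEM_INSTRUCTIONS,
        "",
        f"Predicted label: {record['predicted_label']}",
        f"Score: {record['prediction_score']} (baseline {record['baseline_score']})",
        "Feature contributions:",
    ]
    for f in record["features"]:
        lines.append(
            f"- {f['feature']} = {f['value']}: "
            f"{f['direction']} the score by {abs(f['shap_value'])}"
        )
    lines += ["", OUTPUT_RULES]
    return "\n".join(lines)


record = {
    "predicted_label": "suspicious",
    "prediction_score": 0.7,
    "baseline_score": 0.2,
    "features": [
        {"feature": "failed_logins", "value": 3, "shap_value": 0.45, "direction": "increases"},
        {"feature": "packet_rate", "value": 4, "shap_value": 0.12, "direction": "increases"},
        {"feature": "bytes_out", "value": 2, "shap_value": -0.07, "direction": "decreases"},
    ],
}
print(build_prompt(record))
```

Keeping the data section and the output rules fixed in structure is what lets the same template produce comparable explanations for thousands of predictions.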

To keep explanations consistent and relevant, the system focuses on the most important features, which keeps explanations concise and within the language model’s input limits. Structured prompting and formatting rules help the LLM produce reliable, uniform outputs across different inputs and use cases.
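One way to stay within the language model’s input limits is to keep only the top-k features by absolute SHAP value. The article does not say how the remaining features are handled; this sketch assumes, as a design choice of our own, that they are folded into a single “other features” entry so the contributions still sum to the prediction minus the baseline. The function name, feature names, and the value of k are hypothetical.

```python
def select_top_features(features, k):
    """Keep the k features with the largest |SHAP| and fold the rest into one entry.

    Summing the dropped contributions into an 'other features' row keeps the
    total equal to prediction - baseline, so the shortened input stays consistent.
    """
    ranked = sorted(features, key=lambda f: abs(f["shap_value"]), reverse=True)
    kept, dropped = ranked[:k], ranked[k:]
    if dropped:
        rest = sum(f["shap_value"] for f in dropped)
        kept.append({
            "feature": f"other {len(dropped)} features",
            "value": None,
            "shap_value": round(rest, 4),
            "direction": "increases" if rest >= 0 else "decreases",
        })
    return kept


# Hypothetical per-feature contributions for one prediction.
features = [
    {"feature": "failed_logins", "value": 3, "shap_value": 0.45, "direction": "increases"},
    {"feature": "packet_rate", "value": 4, "shap_value": 0.12, "direction": "increases"},
    {"feature": "bytes_out", "value": 2, "shap_value": -0.07, "direction": "decreases"},
    {"feature": "dst_port_count", "value": 9, "shap_value": 0.03, "direction": "increases"},
    {"feature": "session_duration", "value": 41, "shap_value": -0.01, "direction": "decreases"},
]
for f in select_top_features(features, k=2):
    print(f["feature"], f["shap_value"])
```

Folding the remainder into one row, rather than dropping it silently, means the LLM is never shown contributions that fail to add up to the score it is asked to explain.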

Conclusion

We’re entering a new era in which explainability isn’t a luxury; it’s a necessity. By combining the rigor of SHAP with the language fluency of LLMs, NANCY delivers threat detection that is not only accurate but also understandable. This fusion doesn’t just tell us what the model did; it tells us why it mattered.