Authors: Dimitris Manolopoulos

Organization: UBITECH

Maestro is a geo-distributed service orchestrator designed to make end-to-end service lifecycle management across heterogeneous domains simple, secure, and standards-based. It thereby supports the complex NANCY ecosystem, which rests on three integrated pillars: Mobile Edge Computing (MEC), AI, and blockchain. At its core, Maestro provides (a) integration with standardized OSS systems through TMF service and resource management APIs for domain-level service management, (b) standardized peering with multiple OSS systems to manage end-to-end services across multiple domains, (c) a secure integration fabric that establishes an encrypted service mesh on the fly across administrative domains, and (d) a modern user portal that makes all of these functions easy to use. These capabilities make Maestro well suited to onboarding NANCY services that span the cloud continuum, from central cloud domains to private distributed MEC nodes and operator RAN slices, while abstracting service packaging and platform differences away from service providers.

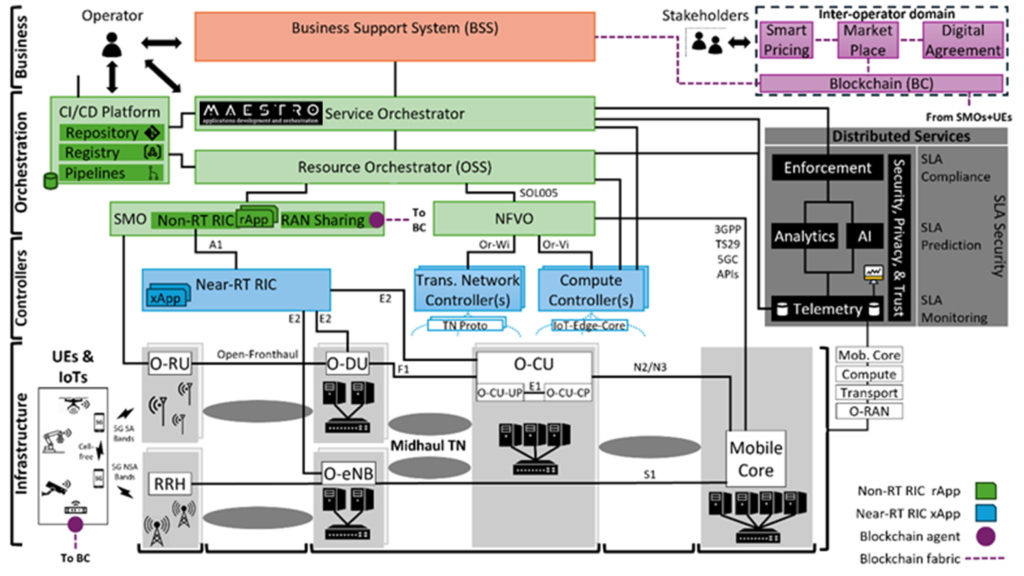

Figure 1: Functional and deployment view of the NANCY architecture

Why Maestro matters for NANCY (short version)

- Cross-domain secure connectivity: Maestro establishes encrypted links between components deployed across different testbeds/domains, removing the need for manually configured VPNs. This is essential for B-RAN experiments where controllers, RICs, and ledger nodes live in different administrative domains.

- TMF-based northbound model: Maestro’s NBIs, which include product/service/resource catalogues, ordering, and inventory capabilities, enable NANCY to expose resources as a service using standard contracts, simplifying orchestration across legacy BSS/OSS-like systems.

- Kubernetes + heterogeneous runtime support: Maestro already supports ordering of multiple Kubernetes flavours (via OpenSlice), enabling the CI/CD and runtime scenarios described in NANCY D6.1 and D4.2.

How Maestro fits into the NANCY architecture (technical map)

- Service definition → Maestro portal / NBI: Service packages (microservices, VNFs, xApps/rApps) are registered in Maestro’s catalog. Maestro expresses resource and network requirements using TMF models so that downstream systems (i.e., OSS) can consume them without bespoke adapters. This directly supports the NANCY orchestration architecture and service lifecycle flows defined in D3.1.

- Peering to OSS / OpenSlice → instantiate compute + 5G connectivity: Maestro uses TMF ordering APIs to request specific compute (K8s flavours) and 5G connectivity via a well-tested ETSI OpenSlice integration. This is the production path for instantiating MEC nodes and private 5G slices described throughout D4.2 (Maestro + OSS interactions).

- Runtime telemetry & CI/CD: Maestro surfaces telemetry and logs for each service instance and integrates with CI/CD pipelines (Maestro Portal + backend), enabling continuous delivery of AI models (AI Virtualizer, self-evolving model repo) and resource elasticity experiments (MADRL/PHaul/SCHED_DEADLINE) in controlled testbeds as per D4.2 and validated via D6.1 testbeds.
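
To make the ordering path above more concrete, the following minimal sketch assembles a service order body that requests a Kubernetes flavour together with 5G connectivity. It is an illustration under stated assumptions, not Maestro's actual payload: the field names loosely follow the TMF641 Service Ordering resource model and should be checked against the API version deployed in the target OSS, while the specification identifiers (`k8s-flavour-small`, `5g-slice-urllc`) and characteristic names are hypothetical.

```python
import json
from dataclasses import dataclass, field


@dataclass
class OrderItem:
    """One line of a service order: a catalog specification plus its characteristics."""
    spec_id: str  # hypothetical catalog identifier
    characteristics: dict = field(default_factory=dict)


def build_service_order(description: str, items: list) -> dict:
    """Assemble a TMF641-style service order body (illustrative subset of fields)."""
    return {
        "description": description,
        "serviceOrderItem": [
            {
                "id": str(index),
                "action": "add",
                "service": {
                    "serviceSpecification": {"id": item.spec_id},
                    "serviceCharacteristic": [
                        {"name": name, "value": value}
                        for name, value in item.characteristics.items()
                    ],
                },
            }
            for index, item in enumerate(items, start=1)
        ],
    }


# Hypothetical order: one compute item and one connectivity item.
order = build_service_order(
    "MEC node with private 5G slice",
    [
        OrderItem("k8s-flavour-small", {"workerNodes": 2}),
        OrderItem("5g-slice-urllc", {"maxLatencyMs": 10}),
    ],
)
print(json.dumps(order, indent=2))
```

Keeping compute and connectivity as separate order items lets each one be fulfilled by whichever domain owns the corresponding resource.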

Concrete integration blueprint (what engineers will actually do)

- Model services as TMF products: represent each NANCY service (e.g., Near-RT RIC xApp + data plane function + ledger client) as a TMF service offered through a Maestro catalog, so that lifecycle actions (order, activation, modification, termination) map cleanly to the underlying resource-facing capabilities of the infrastructure.

- Establish domain connectors: deploy a lightweight domain agent in each testbed to manage on-the-fly encrypted tunnels between Maestro and critical infrastructure services, such as compute clusters. This follows the testbed instantiation patterns in D6.1 and the Slice Manager–Maestro controls described in D4.2.

- Orchestrate resource elasticity flows: wire MADRL agents (computational elasticity), PHaul (network path allocation), and SCHED_DEADLINE control hooks into Maestro’s lifecycle hooks. When a MADRL agent requests vertical scaling, Maestro issues a TMF Inventory API call for the target service, which is ultimately translated into resource scaling commands for the underlying cluster. Telemetry loops feed back to the AI model registry for online retraining. This operational loop is grounded in the elasticity techniques from D4.2 and the orchestration control loop in D3.1.

- Validate on testbeds with CI/CD: use pipelines that build vertical-sector service components, package them into deployable units, and publish them to the relevant catalogues in the Maestro Marketplace. These pipelines then spawn canary deployments across the demo/in-lab clusters described in D6.1 (e.g., the Greek indoor or outdoor testbeds), monitor KPI target metrics (e.g., latency, throughput), and trigger rollback or scale actions via Maestro.
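
The last two steps of the blueprint can be sketched as a small decision gate. This is a minimal sketch under assumptions: the policy (a latency breach triggers rollback, a throughput shortfall triggers scaling), the threshold values, and the characteristic names `cpuCores` and `memoryGiB` are all hypothetical, and the update body only imitates the shape of a TMF-style inventory change rather than reproducing Maestro's interface.

```python
from dataclasses import dataclass


@dataclass
class KpiTargets:
    """KPI thresholds a canary deployment has to meet (hypothetical values)."""
    max_latency_ms: float
    min_throughput_mbps: float


def decide_action(latency_ms: float, throughput_mbps: float, targets: KpiTargets) -> str:
    """Return 'rollback', 'scale', or 'promote' for a canary deployment."""
    if latency_ms > targets.max_latency_ms:
        return "rollback"  # latency breach: treat the canary as unhealthy
    if throughput_mbps < targets.min_throughput_mbps:
        return "scale"     # healthy but under-provisioned: request more resources
    return "promote"


def scaling_patch(cpu_cores: int, memory_gib: int) -> dict:
    """Body of a hypothetical inventory update carrying the new resource sizes."""
    return {
        "serviceCharacteristic": [
            {"name": "cpuCores", "value": cpu_cores},
            {"name": "memoryGiB", "value": memory_gib},
        ]
    }


targets = KpiTargets(max_latency_ms=10.0, min_throughput_mbps=100.0)

for latency, throughput in [(25.0, 150.0), (8.0, 60.0), (8.0, 150.0)]:
    action = decide_action(latency, throughput, targets)
    print(f"latency={latency} ms, throughput={throughput} Mbps -> {action}")
    if action == "scale":
        print("  update body:", scaling_patch(cpu_cores=4, memory_gib=8))
```

In a real pipeline the scaling request would come from the MADRL agent rather than from a fixed rule; the rule here only stands in for that decision so the control flow stays visible.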

Practical tips & pitfalls to watch

• Model the network needs explicitly: include slice SLAs and traffic profiles in the service descriptors, so Maestro (and underlying OSS) can pick the right 5G connectivity flavour and MEC placement. This prevents surprises during runtime elasticity experiments (PHaul / MADRL).

• Issue modular service orders: prefer compositions of primitive services (compute + network + end user) over monolithic service entries, so that Maestro can mix and match deployment targets across diverse testbeds (as seen in D6.1).

• Secure your deployment via secrets: Maestro integrates with HashiCorp Vault for secret lifecycle management. Users can rely on this integration to store encrypted secrets, such as ledger keys and PQC credentials, instead of hardcoding them in service packages.
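
The three tips above can be checked mechanically before a service is onboarded. The sketch below is an illustration only: the descriptor classes, the `vault:` reference prefix, and the secret paths are hypothetical conventions invented for this example, not features of Maestro or HashiCorp Vault.

```python
from dataclasses import dataclass, field
from typing import Optional

SECRET_REF_PREFIX = "vault:"  # hypothetical convention for "stored in Vault, not inline"


@dataclass
class SliceSla:
    """Explicit network needs attached to a network service."""
    max_latency_ms: float
    min_throughput_mbps: float


@dataclass
class PrimitiveService:
    """One building block of a composed service order."""
    name: str
    kind: str  # "compute", "network", or "end-user"
    sla: Optional[SliceSla] = None
    secrets: dict = field(default_factory=dict)


def validate(services: list) -> list:
    """Return human-readable problems; an empty list means the composition passes."""
    problems = []
    for svc in services:
        if svc.kind == "network" and svc.sla is None:
            problems.append(f"{svc.name}: network service has no slice SLA")
        for key, value in svc.secrets.items():
            if not value.startswith(SECRET_REF_PREFIX):
                problems.append(f"{svc.name}: secret '{key}' looks hardcoded")
    return problems


good = [
    PrimitiveService("edge-cluster", "compute"),
    PrimitiveService("urllc-slice", "network", sla=SliceSla(10.0, 100.0)),
    PrimitiveService("ledger-client", "end-user",
                     secrets={"ledgerKey": "vault:secret/nancy/ledger-key"}),
]
bad = [
    PrimitiveService("urllc-slice", "network"),
    PrimitiveService("ledger-client", "end-user", secrets={"ledgerKey": "0xDEADBEEF"}),
]

print(validate(good))
print(validate(bad))
```

Running such a check in the CI/CD pipeline, before publishing to a catalogue, catches missing SLAs and inline secrets while they are still cheap to fix.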

Closing — what success looks like

A successful Maestro integration in NANCY delivers repeatable, secure cross-domain deployments: AI-driven elasticity experiments (MADRL, PHaul), blockchain validator placement strategies, and MEC-backed ultra-low latency VNFs can be provisioned from the same portal/catalog and validated across multiple testbeds with built-in telemetry capabilities. The technical building blocks and interfaces are already aligned: Maestro’s TMF NBIs and secure peering solve the multi-domain orchestration problem, while the NANCY deliverables provide the concrete elasticity and security recipes to use those capabilities in real experiments.